We improve the performance of development teams by introducing new agile practices and removing barriers to their wider adoption.

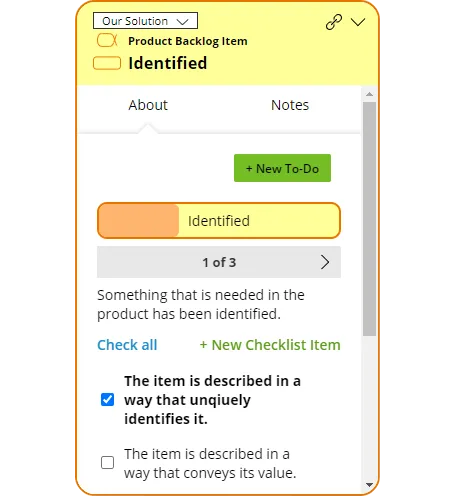

By providing great people, innovative practices, and proven solutions, we ensure that our customers achieve strong business/IT alignment, high-performing teams, and endeavors that deliver.

We employ only high-calibre consultants and industry leaders, and our associate network gives us extensive international coverage. We have helped companies with agile transformations across the US, Europe, the Middle East, and Asia.